My Research

2020

EGAD! an Evolved Grasping Analysis Dataset for diversity and reproducibility in robotic manipulation

Doug Morrison, Peter Corke, Juxi Leitner

IEEE Robotics and Automation Letters (RA-L). Accepted April 2020.

We present the Evolved Grasping Analysis Dataset (EGAD), comprising over 2000 generated objects aimed at training and evaluating robotic visual grasp detection algorithms. The objects in EGAD are geometrically diverse, filling a space ranging from simple to complex shapes and from easy to difficult to grasp. Other datasets for robotic grasping, by contrast, may be limited in size or contain only a small number of object classes. Additionally, we specify a set of 49 diverse 3D-printable evaluation objects to encourage reproducible testing of robotic grasping systems across a range of complexity and difficulty.

2019

Doug Morrison, Peter Corke, Juxi Leitner

International Journal of Robotics Research (IJRR), June 2019

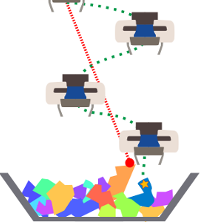

In this extended version of "Closing the Loop for Robotic Grasping" (RSS 2018), we provide a more in-depth look at our real-time, generative grasp synthesis approach through extended analysis, neural network design and a new multi-view approach to grasping. Additionally, we extend our method to use the new Jacquard grasping dataset and demonstrate the ease of transferring our platform-agnostic approach to a new robot.

Shortlist - SAGE HDR Student Publication Prize 2019

Doug Morrison, Peter Corke, Juxi Leitner

International Conference on Robotics and Automation (ICRA), 2019

Camera viewpoint selection is an important aspect of visual grasp detection, especially in clutter where many occlusions are present. Where other approaches use a static camera position or fixed data collection routines, our Multi-View Picking (MVP) controller uses an active perception approach to choose informative viewpoints based directly on a distribution of grasp pose estimates in real time, reducing uncertainty in the grasp poses caused by clutter and occlusions.

Doug Morrison, Anton Milan, Nontas Antonakos

CVPR 2019 Workshop - Robotic Vision Probabilistic Object Detection

In robotics, decisions made based on erroneous visual detections can have disastrous consequences. However, this uncertainty is often not captured by classic computer vision systems or metrics. We address the task of instance segmentation in a robotics context, where we are concerned with uncertainty associated with not only the class of an object (semantic uncertainty) but also its location (spatial uncertainty).

4th Place - First ACRV Probabilistic Object Detection Challenge

2018

Doug Morrison, Peter Corke, Juxi Leitner

Robotics: Science and Systems (RSS), 2018

This paper presents a real-time, object-independent grasp synthesis method which can be used for closed-loop grasping. The lightweight and single-pass generative nature of our GG-CNN allows for closed-loop control at up to 50 Hz, enabling accurate grasping in non-static environments where objects move and in the presence of robot control inaccuracies.

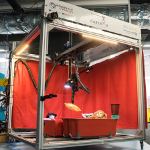

Doug Morrison, AW Tow, M McTaggart, R Smith, N Kelly-Boxall, S Wade-McCue, J Erskine, R Grinover, A Gurman, T Hunn, D Lee, A Milan, T Pham, G Rallos, A Razjigaev, T Rowntree, K Vijay, Z Zhuang, C Lehnert, I Reid, P Corke, J Leitner

International Conference on Robotics and Automation (ICRA), 2018

A system-level description of Cartman, our winning entry into the 2017 Amazon Robotics Challenge.

Finalist - Amazon Robotics Best Paper Awards in Manipulation 2018

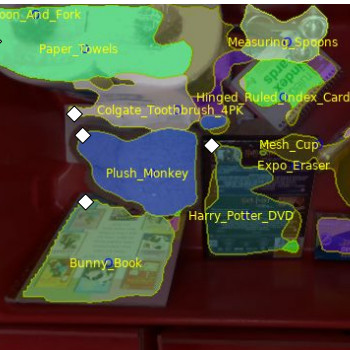

A. Milan, T. Pham, K. Vijay, D. Morrison, A.W. Tow, L. Liu, J. Erskine, R. Grinover, A. Gurman, T. Hunn, N. Kelly-Boxall, D. Lee, M. McTaggart, G. Rallos, A. Razjigaev, T. Rowntree, T. Shen, R. Smith, S. Wade-McCue, Z. Zhuang, C. Lehnert, G. Lin, I. Reid, P. Corke, J. Leitner

International Conference on Robotics and Automation (ICRA), 2018

We present our approach for robotic perception in cluttered scenes that led to winning the recent Amazon Robotics Challenge (ARC) 2017. In contrast to traditional approaches which require large collections of annotated data and many hours of training, the task here was to obtain a robust perception pipeline with only a few minutes of data acquisition and training time. To that end, we present two strategies that we explored.

Norton Kelly-Boxall, Doug Morrison, Sean Wade-McCue, Peter Corke, Juxi Leitner

Australasian Conference on Robotics and Automation (ACRA), 2018

We present the grasping system behind Cartman, the winning robot in the 2017 Amazon Robotics Challenge. The system was designed using an integrated design methodology and makes strong use of redundancy by implementing complementary tools: a suction gripper and a parallel gripper. This multi-modal end-effector is combined with three grasp synthesis algorithms to accommodate the range of objects provided by Amazon during the challenge.

2017

Doug Morrison, Peter Corke, Jürgen Leitner

"Towards robust grasping and manipulation skills for humanoids" Workshop at the IEEE-RAS International Conference on Humanoid Robots, 2017

We present a generative grasping network which encodes a one-to-one mapping from the RGB-D image space to the grasping space, rather than relying on grasp candidate sampling.

Doug Morrison, Norton Kelly-Boxall, Sean Wade-McCue, Peter Corke, Jürgen Leitner

"Towards robust grasping and manipulation skills for humanoids" Workshop at the IEEE-RAS International Conference on Humanoid Robots, 2017

We present a heuristic-based grasp detection system which uses a hierarchy of three different strategies to operate successfully under varying levels of visual uncertainty.

2014

Doug Morrison, Udantha Abeyratne

Journal of Food Engineering 141 (2014)

A publication from my undergraduate thesis, nothing to do with robots. I built a custom, hand-held ultrasound device that could evaluate the quality of oranges by relating ultrasonic surface reflections to hydration and firmness.