About Doug

Doug Morrison

I'm a PhD researcher at the Australian Centre for Robotic Vision (ACRV) at the Queensland University of Technology (QUT) in Brisbane, Australia, supervised by Dr Juxi Leitner and Professor Peter Corke.

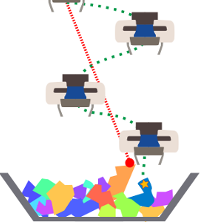

My research focuses on developing new strategies for robotic grasping in the unstructured and dynamic environments of the real world: strategies that are general, reactive and knowledgeable about their environments. The goal is to create robots that can grasp objects anywhere, all the time.

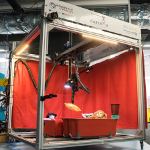

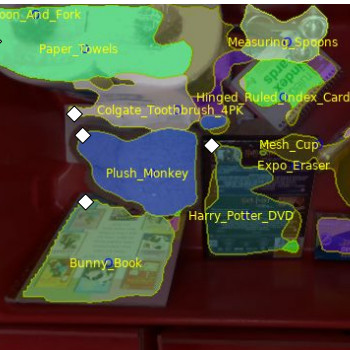

I was also one of the lead developers of Cartman, the ACRV's winning entry into the 2017 Amazon Robotics Challenge!

News

- Apr 2021 - EGAD! an Evolved Grasping Analysis Dataset was awarded an RA-L Best Paper Award for 2020.

- May 2019 - I was named one of the Best Reviewers at ICRA 2019.

- Sep 2018 - "Cartman: The Low-cost Cartesian Manipulator" was named a finalist for the Amazon Robotics Best Systems Paper Award in Manipulation 2018.

- Apr 2018 - I discussed robotic grasping and the ARC on NVIDIA's "The AI Podcast".

- Mar 2018 - Cartman is packing his bags again and heading to GTC 2018 in Silicon Valley, where Juxi and I will be giving a presentation.

- Jul 2017 - We won the Amazon Robotics Challenge 2017! Congratulations to everyone involved!

Selected Publications
